lme4::lmer is a useful frequentist tool for hierarchical/multilevel linear regression modelling. For good reason, its model output reports t-values but no p-values, partly because the degrees of freedom are difficult to estimate (as discussed here).

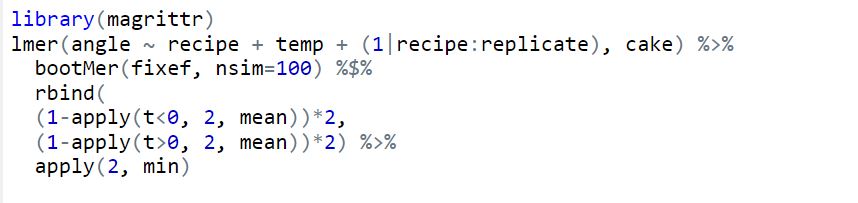

Yes, p-values are evil and we should keep trying to expunge them from our analyses. But I keep getting asked about this, so here is a simple method that uses a parametric bootstrap to generate two-sided p-values for the fixed-effects coefficients. Interpret with caution.

    library(lme4)

    # Run model with lme4 example data
    fit = lmer(angle ~ recipe + temp + (1|recipe:replicate), cake)

    # Model summary
    summary(fit)

    # lme4 profile method confidence intervals
    confint(fit)

    # Bootstrapped parametric p-values
    boot.out = bootMer(fit, fixef, nsim=1000) # nsim determines p-value decimal places
    p = rbind(
      (1-apply(boot.out$t<0, 2, mean))*2,
      (1-apply(boot.out$t>0, 2, mean))*2)
    apply(p, 2, min)

    # Alternative "pipe" syntax
    library(magrittr)

    lmer(angle ~ recipe + temp + (1|recipe:replicate), cake) %>%
      bootMer(fixef, nsim=100) %$%
      rbind(
        (1-apply(t<0, 2, mean))*2,
        (1-apply(t>0, 2, mean))*2) %>%
      apply(2, min)
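If you need this for more than one model, the same calculation can be wrapped in a small helper. This is only a sketch: `boot_p` is a hypothetical name of my own (not part of lme4), and it assumes the default parametric bootstrap in `bootMer`. Passing `seed` makes the result reproducible, and the smallest non-zero p-value you can get is 2/nsim.

```r
library(lme4)

# Hypothetical helper (not an lme4 function): two-sided bootstrap
# p-values for the fixed effects of a fitted lmer model.
boot_p <- function(fit, nsim = 1000, seed = NULL) {
  # Parametric bootstrap of the fixed-effects estimates
  boot.out <- bootMer(fit, fixef, nsim = nsim, seed = seed)

  # Share of bootstrap estimates on each side of zero, per coefficient
  below <- colMeans(boot.out$t < 0, na.rm = TRUE)
  above <- colMeans(boot.out$t > 0, na.rm = TRUE)

  # Two-sided p-value: twice the smaller tail
  p <- 2 * pmin(1 - below, 1 - above)
  names(p) <- names(fixef(fit))
  p
}

fit <- lmer(angle ~ recipe + temp + (1 | recipe:replicate), cake)
p_boot <- boot_p(fit, nsim = 200, seed = 1)
p_boot
```

A p-value of exactly 0 here only means that none of the `nsim` bootstrap estimates crossed zero, so report it as "< 2/nsim" rather than as zero.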

Alternatively, you can use the lmerTest package to get p-values for effects of interest.

Thanks for your interest, Jason. Yes, you can use lmerTest. Douglas Bates chose not to include what he refers to as “SAS” p-values (the kind lmerTest derives) in the lmer output, hence the bootstrap method presented here.

I’ve moved over to Bayesian methods and will post on mixed models using Stan soon. Thanks again.